Test Efficiency

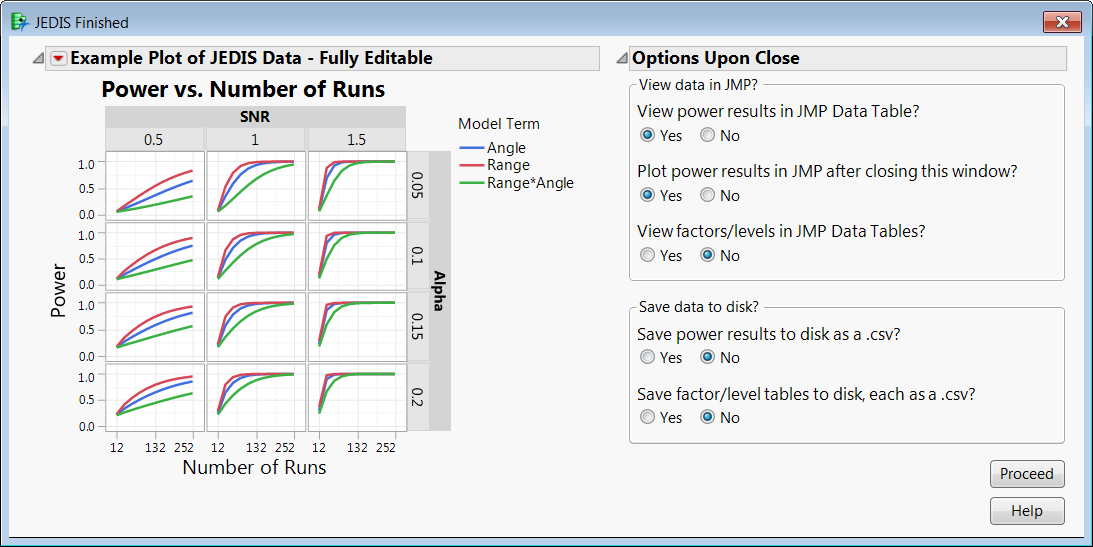

Test Efficiency measures help researchers determine the best way to use available resources. One way to achieve this efficiency is to apply Design of Experiments (DOE) best practices, which outline how to identify test objectives, select outcome measures, and select the factors that affect those outcomes. Testers who want to use the results of one test to inform the design of the next can also incorporate sequential testing techniques, whose definitions and approaches differ slightly depending on the scenario. The Test Science Team explores available variations of DOE methods and ways to implement them in defense tests.

Modeling & Simulation

Modeling & Simulation (M&S) uses a representation of a system (a model) on which tests (simulations) can be run to gain information about the real system. Weapon system evaluations increasingly rely on M&S to supplement live testing. For M&S to be a valuable supplement, testers must understand how well the models represent the systems or processes being simulated, which requires quantifying the uncertainty in the M&S results. The burgeoning research field of Uncertainty Quantification includes concepts such as validation, calibration, and discrepancy modeling. The Test Science Team researches methodologies for applying these concepts to M&S with the goal of improving weapon systems.

Human-System Interaction

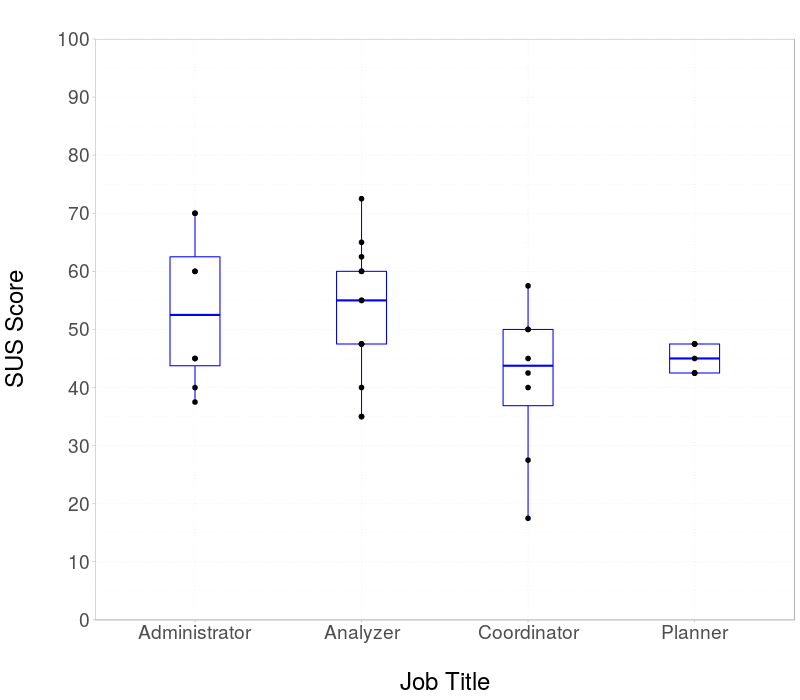

Human-System Interaction (HSI) research investigates why and how to improve user engagement with systems that now include artificial intelligence, robotic teammates, and augmented reality. Improvements in technology, engineering, and cyberspace have led to complex, feature-rich, capable systems, and these have changed the ways humans interact with them. The cost of this complexity can range from failure to fatalities. The Test Science Team applies HSI across the DoD through work that includes surveying, developing best practices for metric analysis, creating new methods for evaluation, and designing statistical models for assessing human-machine teams (HMT).

Autonomy & AI Enabled Systems

Autonomy, in this context, refers to a system's ability to perform tasks with no external influence. As technology advances, researchers, including those in the DoD, are becoming more interested in how machines can perform without the aid of humans. The methodologies that the DoD employs for testing standard systems are highly likely to mischaracterize risk and performance when applied to this emerging technology. Autonomy research includes concepts such as environment perception, decision making, operation, and ethics. The Test Science Team is working to develop a framework for testing autonomous or AI-enabled systems.

Demystifying the Black Box: A Test Strategy for Autonomy